HTTP Load Balancer on Top of WSO2 Gateway — Part 1: Project Repository, Architecture and Features

It has been almost four months, and it has been an amazing journey! At this point, I would like to thank my mentors Isuru Ranawaka and Kasun Indrasiri, and WSO2 community members, especially Senduran and Isuru Udana, for continuously mentoring, supporting and guiding me throughout this project.

When Google announced the list of accepted mentoring organizations, I was looking for projects related to Networking and Java. I came across WSO2’s idea list and was pretty excited to see HTTP Load Balancer as a project idea. I had a good understanding of load balancers and was eager to get into their internals and develop one. So I contacted my mentors, and they gave me an idea of how to get started with the WSO2 stack. They asked me to come up with a set of features that I was willing to develop as part of this project. I also got great help and guidance from WSO2 community members right from the time of writing the proposal. With their guidance and suggestions, I was able to come up with a basic architecture, a set of features and a tentative timeline.

Once the selected project proposals were announced, my mentor gave me a clear idea of what was expected and how to proceed with the project. Here are my previous blog posts on community bonding period and mid term evaluations. I’ve completed all the features that I had committed to in my project proposal. Based on the performance benchmarks that I have done (to be discussed in the next post), this Load Balancer is performing better than Nginx (Open Source Version). My mentors are also happy with the outcome. There is a lot more to be done to take this LB to production, such as performance improvements and the ability to function in multi-level mode, and moreover the underlying Carbon Gateway Framework is continuously evolving. Even after the GSoC period, I’ll keep contributing to this project to make it production ready.

In this post, I’ll be discussing High Level Architecture, Engine Architecture, Message Flow and Load Balancer specific features.

Note: HTTP Load Balancer on Top of WSO2 Gateway is referred to as GW-LB.

Project Repository

This GSoC project has been added to WSO2 Incubator and I’ve been given membership to WSO2 Incubator Organization :D !

Since GW-LB has a standalone runtime, it is developed and managed as a separate project. You can also find the project in my personal repository, from which it has been added to WSO2 Incubator.

Carbon Gateway Framework with ANTLR grammar support for LB can be found here. As DSL and gateway framework are evolving, there will be some changes to grammar in future. Please find my commits for handling LB specific configurations and ANTLR grammar support here.

README file has instructions to build and work with the product. You could also simply extract this file and try it out !

High Level Architecture

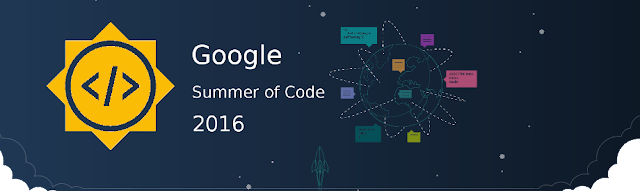

WSO2 Gateway Framework is a high performance, lightweight, low-latency messaging framework based on the standard gateway pattern. Its Netty based non-blocking IO and Disruptor (ring-buffer) architecture make it the fastest open-source gateway available. Benchmarks[1] show that the performance of the gateway is very high when compared to other solutions and is close to that of a direct Netty based backend (without any intermediate gateway).

GW-LB makes use of WSO2’s Carbon Gateway Framework, Carbon Transports and Carbon Messaging. These are highly modular and easily extensible as they are OSGi bundles and are part of the WSO2 Carbon Platform. This LB is itself an OSGi bundle, and it is built on top of the Carbon Gateway Framework. When all these bundles are packaged together with Carbon Kernel, they form the GW-LB Server.

- Carbon gateway framework provides configuration management and basic mediation capabilities.

- Carbon transports act as the transport layer within the WSO2 stack.

- Within WSO2 stack, Messages (Requests / Response) are mediated in the form of Carbon Messages.

When a request reaches Carbon Transports (WSO2-Netty Listener), the additional layer required for mediation is added and it becomes a Carbon Message. Similarly, after mediation, when the Carbon Message reaches Carbon Transports (WSO2-Netty Sender), all Carbon Message related details are removed and the message is sent to the corresponding endpoint. It works the same way when the response arrives from the back-end. This flow is shown in Figure 1.

Figure 1: High Level Architecture

Engine Architecture

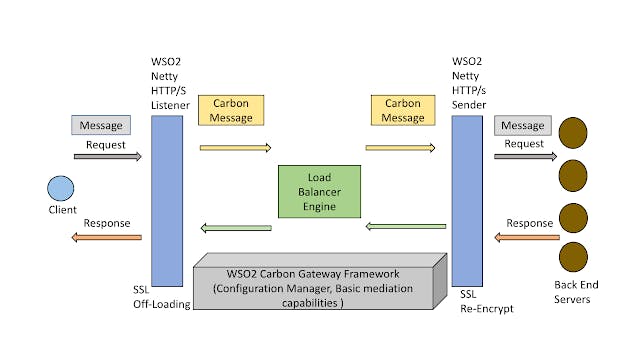

This LB has been built by keeping in mind the modular and extensible nature of WSO2 product stack. WSO2 uses ANTLR4 to develop domain specific language (DSL) for its carbon gateway framework. This DSL will be used to configure and define mediation rules for various products built using this framework including this LB.

The Gateway Framework and DSL are continuously evolving, so the LB Engine is completely decoupled from the DSL. Also, developers can easily develop their own LB algorithms and persistence policies and plug them into this LB.

Figure 2 clearly depicts the modules that are specific to LB Engine, Carbon Gateway Framework and Carbon Transports.

Figure 2: Engine Architecture

Message Flow

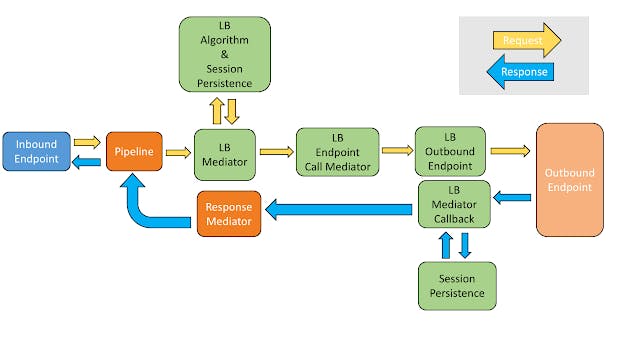

As mentioned above, modules within the WSO2 stack communicate via Carbon Messages. Refer to Figure 3 to get a clear idea of how a message flows through the various LB modules.

Request Flow: From Client -> LB -> Back-End

- When a client’s request reaches WSO2-Netty Listener it gets transformed to Carbon Message. This carbon message then reaches Inbound Endpoint.

- This carbon message then flows via Pipeline and reaches ‘LB Mediator’.

- Each LB Outbound Endpoint has its own LB Endpoint Call Mediator. LB Mediator uses this LB Endpoint Call Mediator to forward request to the corresponding LB Outbound Endpoint.

- If there is no persistence policy, LB Algorithm returns the name of LB Outbound Endpoint to which LB Mediator has to forward the request.

- If there is any persistence policy, LB Mediator takes appropriate action (discussed later) and finds the name of LB Outbound Endpoint.

- ‘LB Mediator’ then passes the carbon message to ‘LB Endpoint Call Mediator’.

- This ‘LB Endpoint Call Mediator’ creates a ‘LB Mediator Call Back’ and forwards the carbon message to ‘LB Outbound Endpoint’ which in-turn forwards message to ‘Outbound Endpoint’.

- ‘Outbound Endpoint’ then forwards carbon message to back-end service.

- When carbon message reaches WSO2-Netty Sender, it transforms carbon message back to original client request and sends it to the corresponding back-end service.

Figure 3: Message Flow

Response Flow: From Back-End -> LB -> Client

- When response from back-end reaches WSO2-Netty Sender, it gets transformed to Carbon Message and then its corresponding LB Mediator Callback is invoked.

- Based on the configured session persistence policy, LB Mediator Callback takes corresponding action required for session persistence and forwards carbon message to Response Mediator.

- The Response Mediator then forwards message to Pipeline which in-turn forwards message to Inbound Endpoint.

- Inbound Endpoint then forwards message to corresponding client.

- When carbon message reaches WSO2-Netty Listener, it transforms carbon message back to original back end response and sends it to the corresponding client.

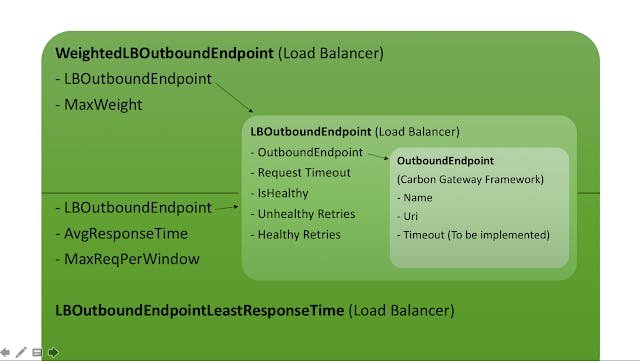

Outbound Endpoints

Back-end service endpoints are mapped as Outbound Endpoints in Carbon Gateway Framework. LB Engine requires a few additional attributes for load balancing these Outbound Endpoints. Figure 4 explains the differences between Outbound Endpoint, LB Outbound Endpoint, Weighted LB Outbound Endpoint and LB Outbound Endpoint for the Least Response Time algorithm.

Figure 4: Different Outbound Endpoints in GW-LB

Features

This LB supports various load balancing algorithms, session persistence policies, health checking and redirection.

Algorithms

This LB supports both weighted and non-weighted algorithms. In non-weighted algorithms, all Outbound Endpoints are considered to have equal weight. In weighted algorithms, a weight can be configured for each Outbound Endpoint. If no weight is specified for an endpoint, the default value of 1 is taken as its weight.

Non-Weighted (Simple) Algorithms

1) Round-Robin

LB Mediator forwards requests to Outbound Endpoints in a Round-Robin fashion. If there is any persistence policy, LB Mediator forwards request to the Outbound Endpoint based on it.

2) Random

LB Mediator forwards request to Outbound Endpoints in Random fashion. If there is any persistence policy, LB Mediator forwards request to the Outbound Endpoint based on it.

3) Strict Client IP Hashing

LB looks for the client’s IP address in the incoming request headers (request headers are available in the Carbon Message). As of now, LB looks for the following headers:

a) X-Forwarded-For

b) Client-IP

c) Remote-Addr

These are configurable, and more headers can be added to the lookup if necessary. If LB cannot retrieve the client’s IP, or if that IP is not a valid one, LB will send an internal server error response to the client. In this algorithm mode, the persistence policy should be NO_PERSISTENCE, and a request will be load balanced only if a valid client IP is available.
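The header lookup described above can be sketched roughly as follows. This is only an illustration, not the GW-LB code: the class name `ClientIpResolver`, the plain `Map` of headers, the IPv4-only validation and the choice of the first entry of a comma-separated `X-Forwarded-For` value are all assumptions made for the example.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.Optional;
import java.util.regex.Pattern;

// Hypothetical helper, not part of GW-LB: finds a client IP in request headers.
class ClientIpResolver {
    // Headers are checked in this order; the list stands in for the configurable one.
    private static final List<String> HEADERS =
            Arrays.asList("X-Forwarded-For", "Client-IP", "Remote-Addr");

    // Assumption: only dotted-quad IPv4 addresses are treated as valid here.
    private static final Pattern IPV4 = Pattern.compile(
            "^((25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)\\.){3}(25[0-5]|2[0-4]\\d|1\\d\\d|[1-9]?\\d)$");

    static Optional<String> resolve(Map<String, String> headers) {
        for (String header : HEADERS) {
            String value = headers.get(header);
            if (value == null) {
                continue;
            }
            // X-Forwarded-For may hold "client, proxy1, proxy2"; take the first entry.
            String candidate = value.split(",", -1)[0].trim();
            if (IPV4.matcher(candidate).matches()) {
                return Optional.of(candidate);
            }
        }
        return Optional.empty(); // no valid client IP in any known header
    }
}
```

With a helper like this, the strict algorithm would answer with an internal server error when the result is empty, and would hash the returned address otherwise.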

Also, LB uses scalable and efficient consistent hashing rather than simple modulo hashing.

The advantage of consistent hashing is that if a particular node goes down, only those clients that maintained a session with that node have to be remapped to other nodes. The session persistence or affinity of other clients is not affected. When that node is up and back in a healthy state, only those clients that were remapped will be mapped back to it. With modulo hashing, all the clients would be remapped and their sessions lost, which creates a bad user experience.
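To make the remapping property concrete, here is a minimal consistent-hash ring built on `java.util.TreeMap`. It is a sketch under stated assumptions and not the hashing code used in GW-LB: the class name `ConsistentHashRing`, the use of virtual nodes and the MD5-based hash function are choices made for this example.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch of a consistent-hash ring; not the GW-LB implementation.
class ConsistentHashRing {
    private final TreeMap<Long, String> ring = new TreeMap<>();
    private final int virtualNodes; // positions per endpoint, to smooth the distribution

    ConsistentHashRing(int virtualNodes) {
        this.virtualNodes = virtualNodes;
    }

    void addEndpoint(String name) {
        for (int i = 0; i < virtualNodes; i++) {
            ring.put(hash(name + "#" + i), name);
        }
    }

    void removeEndpoint(String name) {
        for (int i = 0; i < virtualNodes; i++) {
            ring.remove(hash(name + "#" + i));
        }
    }

    // Walk clockwise from the client's hash to the first endpoint position.
    String endpointFor(String clientIp) {
        if (ring.isEmpty()) {
            return null;
        }
        Map.Entry<Long, String> entry = ring.ceilingEntry(hash(clientIp));
        return entry != null ? entry.getValue() : ring.firstEntry().getValue();
    }

    // First 8 bytes of an MD5 digest, packed into a long.
    private static long hash(String key) {
        try {
            byte[] digest = MessageDigest.getInstance("MD5")
                    .digest(key.getBytes(StandardCharsets.UTF_8));
            long h = 0;
            for (int i = 0; i < 8; i++) {
                h = (h << 8) | (digest[i] & 0xFF);
            }
            return h;
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e); // MD5 is available on every Java platform
        }
    }
}
```

Removing an endpoint only deletes its own positions from the ring, so a client whose nearest position belonged to another endpoint keeps its mapping. Adding the endpoint back restores exactly the positions that were removed.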

4) Least Response Time

A running average of the response time is calculated for each endpoint on every request. After a fixed WINDOW number of requests has elapsed, the load distribution across endpoints is decided based on their response times. A higher response time for an endpoint indicates a higher load on it. So, LB tries to reduce an endpoint’s response time by forwarding fewer requests to it and more requests to the endpoint with the least response time. By doing this, LB achieves even load distribution based on the response time of endpoints.
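The running average itself is simple to maintain incrementally. The sketch below shows one way to do it, together with one possible way of turning the averages into a load distribution. The class name `ResponseTimeTracker` is hypothetical, and the inverse-proportional split in `shares()` is an assumption made for illustration; the post does not state the exact formula GW-LB applies at the end of each window.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch; the distribution rule in shares() is an assumption.
class ResponseTimeTracker {
    // endpoint name -> {running average in ms, number of samples}
    private final Map<String, double[]> stats = new LinkedHashMap<>();

    // Update the running average without storing individual samples.
    void record(String endpoint, double millis) {
        double[] s = stats.computeIfAbsent(endpoint, k -> new double[2]);
        s[1] += 1;
        s[0] += (millis - s[0]) / s[1];
    }

    double average(String endpoint) {
        return stats.get(endpoint)[0];
    }

    // Assumed policy: an endpoint's share of the next window is inversely
    // proportional to its average response time.
    Map<String, Double> shares() {
        double sumInverse = 0;
        for (double[] s : stats.values()) {
            sumInverse += 1.0 / Math.max(s[0], 1e-9); // guard against a zero average
        }
        Map<String, Double> result = new LinkedHashMap<>();
        for (Map.Entry<String, double[]> e : stats.entrySet()) {
            result.put(e.getKey(), (1.0 / Math.max(e.getValue()[0], 1e-9)) / sumInverse);
        }
        return result;
    }
}
```

A caller would invoke `record` on every response and recompute `shares()` once every WINDOW requests.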

Weighted Algorithms

1) Weighted Round-Robin

LB Mediator forwards requests to Outbound Endpoints in a Round-Robin fashion while taking the endpoints’ weights into account. For example, suppose endpoints A, B and C have weights of 3, 2 and 5 respectively. In a total of 10 requests:

a) The first 3 requests go to endpoints A, B and C.

b) The next 3 requests also go to endpoints A, B and C. Now, 2 requests have been forwarded to endpoint B, so it will not be considered again until a total of 10 requests have elapsed.

c) Endpoint A receives the next request. Now, 3 requests have been forwarded to endpoint A, so it will not be considered again until a total of 10 requests have elapsed.

d) The remaining 3 requests will be forwarded to endpoint C. Now a total of 10 requests have elapsed.

The cycle begins again.

Since endpoints are weighted and weights represent processing power, requests forwarded to endpoints based on persistence policy will also be taken into account.
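The cycle in the A, B, C example can be reproduced with a small amount of state: a per-endpoint counter of requests served in the current cycle and a rotating index. The following is a minimal sketch that assumes positive integer weights and ignores persistence; the class name `WeightedRoundRobin` is hypothetical and this is not the GW-LB source.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of the weighted round-robin cycle described above.
class WeightedRoundRobin {
    private final List<String> names = new ArrayList<>();
    private final List<Integer> weights = new ArrayList<>();
    private final int[] served;   // requests sent to each endpoint in this cycle
    private final int totalWeight;
    private int index = 0;        // next endpoint to consider
    private int servedInCycle = 0;

    WeightedRoundRobin(Map<String, Integer> endpointWeights) {
        int total = 0;
        for (Map.Entry<String, Integer> e : endpointWeights.entrySet()) {
            if (e.getValue() < 1) {
                throw new IllegalArgumentException("weight must be >= 1");
            }
            names.add(e.getKey());
            weights.add(e.getValue());
            total += e.getValue();
        }
        if (names.isEmpty()) {
            throw new IllegalArgumentException("at least one endpoint is required");
        }
        served = new int[names.size()];
        totalWeight = total;
    }

    synchronized String next() {
        if (servedInCycle == totalWeight) { // cycle complete, start again
            java.util.Arrays.fill(served, 0);
            servedInCycle = 0;
            index = 0;
        }
        // Skip endpoints that have already used up their weight in this cycle.
        while (served[index] >= weights.get(index)) {
            index = (index + 1) % names.size();
        }
        String chosen = names.get(index);
        served[index]++;
        servedInCycle++;
        index = (index + 1) % names.size();
        return chosen;
    }

    public static void main(String[] args) {
        Map<String, Integer> w = new LinkedHashMap<>();
        w.put("A", 3);
        w.put("B", 2);
        w.put("C", 5);
        WeightedRoundRobin lb = new WeightedRoundRobin(w);
        StringBuilder order = new StringBuilder();
        for (int i = 0; i < 10; i++) {
            order.append(lb.next()).append(' ');
        }
        System.out.println(order.toString().trim()); // A B C A B C A C C C
    }
}
```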

2) Weighted Random

Similar to weighted round-robin, but the order in which endpoints are chosen is random.

Easily Extensible Nature

Custom Load Balancing Algorithms (Simple or Weighted) can be easily written by implementing corresponding interfaces.

Session Persistence

Client IP Hashing, Application Cookie and LB Inserted cookie are the three persistence policies supported by this LB as of now.

1) Client IP Hashing

Similar to the Strict Client IP Hashing algorithm. The only difference is that if LB cannot find a valid client IP in the request headers, the request will still be load balanced based on the configured load balancing algorithm. It also uses scalable consistent hashing rather than modulo hashing.

2) Application Cookie

LB inserts its own cookie inside the cookie inserted by the application server. When a client sends a request, LB looks for a cookie in the specified format (the LB inserted cookie), and based on the cookie’s value, the request is forwarded to the corresponding back-end so that persistence is maintained. LB also removes the cookie it inserted before forwarding the request to the Outbound Endpoint.

The cookie expiration value is controlled by the back-end application and not by LB. If there is no cookie in the response sent by the back-end, LB will insert its own cookie for the sake of maintaining persistence. This cookie will be a session cookie, i.e., session persistence will be maintained as long as the client’s browser is open. Once it is closed, persistence will be lost. Also, this custom cookie inserted by LB will be removed before the request is forwarded to the back-end.

3) LB Inserted Cookie

This persistence policy comes in handy when the back-end application service does not insert a cookie but persistence has to be maintained. It works like the Application Cookie policy; the only difference is that the inserted cookie is a session cookie.

Health Checking and Redirection

This LB supports both active and passive health checking modes. If health checking is not necessary, it can also be disabled. Passive health checking is the default mode as it doesn’t introduce any additional overhead on back-end services or networks. Be it active or passive health checking, the following parameters are required.

a) Request Timeout: Time interval after which a request is marked as timed out if no response has been received.

b) Health Check Interval: Time interval between two health checks.

c) Unhealthy Retries: Number of times requests have to fail (time out) consecutively before an endpoint is marked as unhealthy.

d) Healthy Retries: Number of times LB should be able to successfully establish a connection to the server’s port before marking it as healthy again.

- For each Timed-out request LB will send “HTTP Status Code: 504, Gateway Timeout”.

- If all Outbound endpoints are unhealthy and are unavailable LB will send “HTTP Status Code: 503, Service Unavailable”.

Passive Health Check

In this mode, LB doesn’t send any additional connection probes to check whether an endpoint is healthy or not. It simply keeps track of consecutive failed (timed-out) requests to an endpoint. If Unhealthy retries count is reached, that endpoint will be marked as unhealthy and no more requests will be forwarded to that endpoint until it is back to healthy state.

Active Health Check

LB periodically sends connection probes to check whether an endpoint is healthy or not. In this case, both consecutive failed requests and consecutive failed connection probes to an endpoint will be taken into account. If the Unhealthy Retries count is reached, that endpoint will be marked as unhealthy and no more requests will be forwarded to it until it is back in a healthy state.

BackToHealthyHandler

BackToHealthyHandler is a thread that is scheduled to run each time the “Health Check Interval” elapses. It sends connection probes to unhealthy endpoints and tries to establish a connection. If it succeeds in establishing a connection Healthy Retries number of times, that endpoint will be marked as healthy again and requests will be forwarded to it.
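The interplay between Unhealthy Retries and Healthy Retries can be pictured as a small state machine per endpoint. The sketch below is only an illustration with a hypothetical class name, `EndpointHealth`, and is not taken from GW-LB. It also assumes that a failed probe resets the count of successful probes, which the description above does not spell out.

```java
// Hypothetical per-endpoint health state; not the GW-LB implementation.
class EndpointHealth {
    private final int unhealthyRetries;
    private final int healthyRetries;
    private int consecutiveFailures = 0;
    private int consecutiveSuccessfulProbes = 0;
    private boolean healthy = true;

    EndpointHealth(int unhealthyRetries, int healthyRetries) {
        this.unhealthyRetries = unhealthyRetries;
        this.healthyRetries = healthyRetries;
    }

    // Called when a request to this endpoint times out (passive check).
    synchronized void onRequestTimeout() {
        if (!healthy) {
            return;
        }
        if (++consecutiveFailures >= unhealthyRetries) {
            healthy = false;
            consecutiveSuccessfulProbes = 0;
        }
    }

    // A successful response breaks the run of consecutive failures.
    synchronized void onRequestSuccess() {
        consecutiveFailures = 0;
    }

    // Called by the periodic probe task for endpoints marked unhealthy.
    synchronized void onProbeResult(boolean connected) {
        if (healthy) {
            return;
        }
        if (!connected) {
            consecutiveSuccessfulProbes = 0; // assumption: successes must be consecutive
            return;
        }
        if (++consecutiveSuccessfulProbes >= healthyRetries) {
            healthy = true;
            consecutiveFailures = 0;
        }
    }

    synchronized boolean isHealthy() {
        return healthy;
    }
}
```

In active mode, a failed connection probe against a healthy endpoint could be counted through the same path as `onRequestTimeout`.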

In my next post, I’ll be discussing the performance benchmark results of this load balancer.

Thanks for Reading !

Originally published at https://venkat2811.blogspot.com/2016/08/http-load-balancer-on-top-of-wso2.html on Aug 18, 2016.